Survivorship Bias

Last modified: December 19, 2019

What is Survivorship Bias?

Survivorship bias is the tendency to draw conclusions based on things that have survived some selection process and to ignore things that did not survive. It is a cognitive bias and a form of selection bias.

There are two main ways people reach erroneous conclusions through survivorship bias: inferring a norm and inferring causality.

Inferring a norm

Assuming that the things that survived a process are the only things that ever existed.

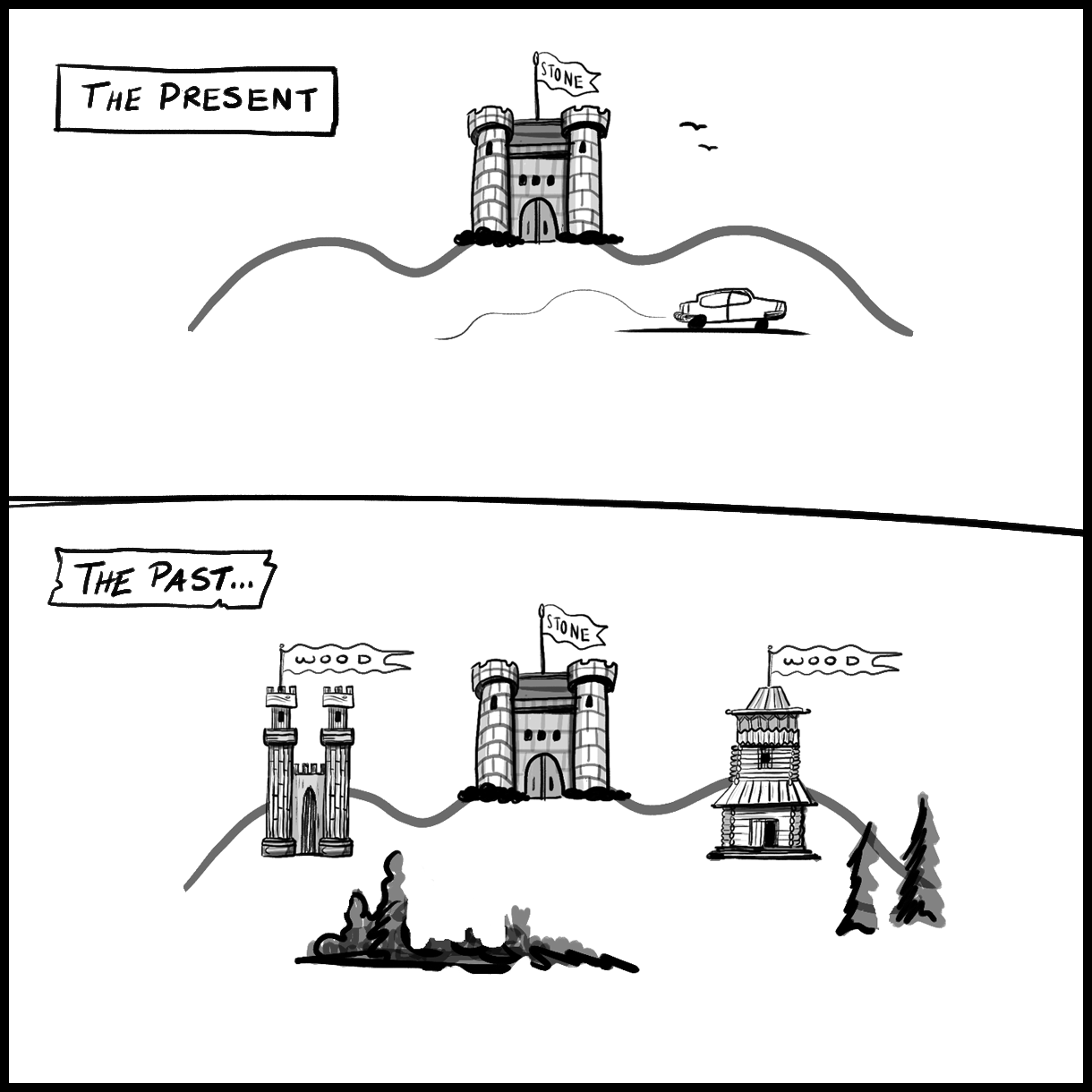

Example:

“Most castles were made of stone” vs “most castles were made of wood but were destroyed by fire or withered away over time.”

Present

All we see is the surviving stone castle.

Past

In the past we see the same stone castle, but also the more numerous wooden castles. The wooden castles did not survive to the present.

People assume that the things they see, or have concrete evidence of, in the present are the only things that have ever existed. In fact, most of the things that existed in the past no longer exist in the present.

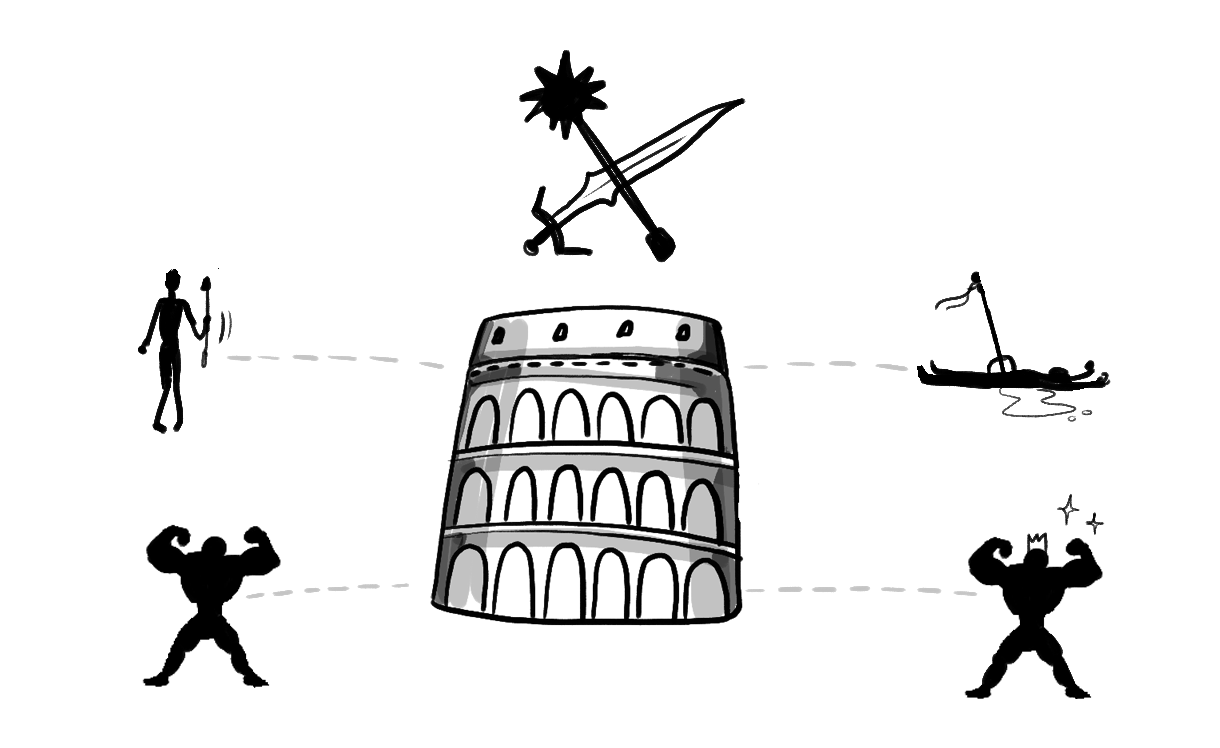

Inferring causality

Assuming that anything that survived a process was shaped by that process.

Example:

“Men get tough fighting in the coliseum” vs “only tough men survive the coliseum.”

People assume that competing in the coliseum caused the outcome, when it actually filtered out the weak and left the strong. Fighters did not necessarily grow from their experience in the coliseum; rather, the coliseum exposed who was most fit for it.
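The filtering effect can be sketched in a few lines of Python. This is only an illustration: the toughness scores and the survival threshold are made-up assumptions, and the model deliberately lets nobody get tougher.

```python
from statistics import mean

# Hypothetical toughness scores for ten fighters entering the coliseum.
toughness_before = [2, 3, 3, 4, 5, 5, 6, 7, 8, 9]

# Assumption: the coliseum only filters. Anyone below the threshold is
# eliminated, and no fighter's toughness changes.
SURVIVAL_THRESHOLD = 6
survivors = [t for t in toughness_before if t >= SURVIVAL_THRESHOLD]

avg_entrants = mean(toughness_before)
avg_survivors = mean(survivors)

print(f"Average toughness of all entrants: {avg_entrants}")
print(f"Average toughness of survivors:    {avg_survivors}")
```

The survivors' average is higher than the entrants' average even though no individual changed, which is exactly the pattern that tempts people to conclude that the coliseum makes men tough.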

Survivorship Bias in Business

These biased ways of interpreting events and data are common in business. Let’s imagine you work at a business intelligence software company that just launched a two-week free trial.

After one week, in the middle of the trial, you only have a few people still active. Let’s say everyone who is still engaging on your site is a data analyst. The data analyst users are creating progressively more complicated analyses in your BI tool.

What conclusions could you draw?

- My BI tool resonates with data analysts

- My BI tool empowers deep analysis

Why might these conclusions be wrong?

My BI tool resonates with data analysts (inferring a norm)

Without examining the people who stopped using the tool, we do not know whether that group also included data analysts. Suppose everyone who started the trial was a data analyst, and more of them gave up than kept engaging. That would directly contradict your previous conclusion.

We need to investigate those who did not survive the free trial process before drawing conclusions. Just because all of your engaged users are data analysts does not mean your product resonates with all data analysts.

How to reach a more informed conclusion:

Analyze everyone who started the trial to find patterns that truly separate cohorts. Maybe there is something that distinguishes those who engaged from those who did not continue the trial, but we must look at the non-survivors before inferring a norm.
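As a minimal sketch of this kind of full-cohort check, the Python below compares the survivor-only view with retention by role across everyone who started. The records, the role labels, and the `retention_by_role` helper are hypothetical and exist only for illustration.

```python
from collections import Counter

# Hypothetical trial records: (user_id, role, still_active_after_one_week).
trial_users = [
    ("u1", "data analyst", True),
    ("u2", "data analyst", True),
    ("u3", "data analyst", False),
    ("u4", "data analyst", False),
    ("u5", "data analyst", False),
    ("u6", "marketer", False),
    ("u7", "engineer", False),
    ("u8", "data analyst", False),
]


def retention_by_role(users):
    """Return {role: (active_count, started_count, retention_rate)}."""
    started = Counter(role for _, role, _ in users)
    active = Counter(role for _, role, is_active in users if is_active)
    return {
        role: (active[role], total, active[role] / total)
        for role, total in started.items()
    }


# Survivor-only view: the set of roles among users who are still active.
survivor_roles = {role for _, role, is_active in trial_users if is_active}
print("Roles among active users:", survivor_roles)

# Full-cohort view: retention rate per role among everyone who started.
rates = retention_by_role(trial_users)
for role, (kept, total, rate) in sorted(rates.items()):
    print(f"{role}: {kept}/{total} retained ({rate:.0%})")
```

In this made-up data every active user is a data analyst, yet only 2 of the 6 data analysts who started are still engaging, so "resonates with data analysts" would be a weak conclusion.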

My BI tool empowers deep analysis (inferring causality)

Right now, you are not comparing your users’ capabilities against a control. They may be doing the most advanced things your BI tool allows, but they might be capable of even more advanced analysis when using other BI tools. They might be very skilled analysts who are succeeding in spite of your design, not because of it.

How to reach a more informed conclusion:

Do a pre-assessment of users’ skill level to see if your product/features are actually adding value. Run tests in which people perform similar tasks with the tool(s) they currently use for this kind of analysis. These tests can become a great PR piece for your product or feature if you can cite a positive benefit: “Users complete BI analysis twice as fast on our tool as on our leading competitor’s tool”.
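One way to read the results of such a test is to group task times by pre-assessed skill level and by tool, so that skilled users do not mask a weak design. The sketch below assumes made-up timings, skill labels, and tool names; it is not based on real measurements.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical test results:
# (pre-assessed skill level, tool used, minutes to finish the same task).
results = [
    ("novice", "our_tool", 40), ("novice", "current_tool", 55),
    ("novice", "our_tool", 44), ("novice", "current_tool", 53),
    ("expert", "our_tool", 20), ("expert", "current_tool", 18),
    ("expert", "our_tool", 22), ("expert", "current_tool", 16),
]


def mean_minutes_by_skill_and_tool(rows):
    """Average completion time for each (skill, tool) pair."""
    grouped = defaultdict(list)
    for skill, tool, minutes in rows:
        grouped[(skill, tool)].append(minutes)
    return {key: mean(values) for key, values in grouped.items()}


averages = mean_minutes_by_skill_and_tool(results)
for skill in ("novice", "expert"):
    ours = averages[(skill, "our_tool")]
    theirs = averages[(skill, "current_tool")]
    print(f"{skill}: our tool {ours} min, current tool {theirs} min")
```

In this invented data the tool helps novices but slows experts slightly, a difference that would stay hidden if you only watched expert users doing advanced work in your own product.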

Summary

- Analyze the full cohort, not just the users who are still engaging partway through the trial.

- Compare user behavior with competitor tools to judge the impact of your product.

- Conduct pre-assessments to judge current skill levels.

Written by: Matt David

Reviewed by: Twange Kasoma, Mike Yi, Blake Barnhill, Matthew Layne